By David Wiley, Chief Academic Officer

The potential of the “data revolution” in teaching and learning, just as in other sectors, is to create much more timely feedback loops for tracking the effectiveness of a complex system. In a field where feedback is already well established as a vital process for both students and educators, the question is how this potential can be realized through effective human-computer systems (Buckingham Shum and McKay, 2018).

Open educational resources (OER) are educational materials whose copyright licensing grants everyone free permission to engage in the 5R activities (retain, reuse, revise, remix, and redistribute), including making changes to the materials and sharing those updated materials with others. Consequently, everyone who wants to continuously improve OER has permission to do so. (Not so with traditionally copyrighted materials, whose licensing allows only the rightsholder to alter and improve the content.) Permission to make changes is a necessary – but not sufficient – condition for continuous improvement.

In addition to permission to make changes, improvement requires a capacity for measurement. We can say we’ve changed OER without measuring the impact of those changes, but we can only say we’ve improved OER when we have measured student outcomes and confirmed that they have actually changed for the better.

Continuous improvement of OER, then, is the iterative process of:

- Instrumenting OER for measurement,

- Measuring their effectiveness in supporting student mastery of learning outcomes,

- Identifying areas where student mastery of those learning outcomes was not effectively supported,

- Making changes to the learning design of the underperforming OER aligned to those learning outcomes, and then

- Beginning the cycle again so we can:

    - Measure the impact of those changes and determine whether or not they were actually improvements (not just changes), and

    - Identify additional areas that need strengthening.

Engaging in the continuous improvement of OER in this manner allows us to make OER support learning more effectively each semester.
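Deciding whether a change was "actually an improvement (not just a change)" ultimately comes down to a statistical comparison. As one hedged illustration, the sketch below applies a one-sided two-proportion z-test to the share of students who mastered a single outcome before and after a revision. The function names, the pass/fail framing of mastery, and the 0.05 threshold are assumptions for illustration, not Lumen's documented method. The test also assumes the two cohorts are independent and comparable, which real semesters only approximate.

```python
import math


def two_proportion_z_test(passed_before, n_before, passed_after, n_after):
    """One-sided two-proportion z-test for a single learning outcome.

    Compares the share of students who mastered the outcome before a
    revision with the share who mastered it after. Returns (z, p_value),
    where p_value is the probability of seeing an increase at least this
    large if the revision had no real effect.
    """
    rate_before = passed_before / n_before
    rate_after = passed_after / n_after
    # Pooled mastery rate under the null hypothesis of "no change".
    pooled = (passed_before + passed_after) / (n_before + n_after)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    if se == 0:
        # Everyone (or no one) mastered the outcome in both cohorts.
        return 0.0, 0.5
    z = (rate_after - rate_before) / se
    # Upper-tail probability P(Z >= z) for a standard normal Z.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


def is_improvement(passed_before, n_before, passed_after, n_after, alpha=0.05):
    """Hypothetical decision rule: treat a change as an improvement only if
    the rise in mastery rate is significant at level alpha."""
    _z, p_value = two_proportion_z_test(passed_before, n_before,
                                        passed_after, n_after)
    return p_value < alpha
```

For example, if 120 of 200 students mastered an outcome before a revision and 150 of 200 after, z is about 3.2 and the revision would count as an improvement under this rule; 124 of 200 after would not.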

Learning Design and Continuous Improvement

Lumen instruments OER for measurement at the individual learning outcome level. Outcome alignment is at the very core of both our learning design process and our continuous improvement process. The outcome alignment process has three parts.

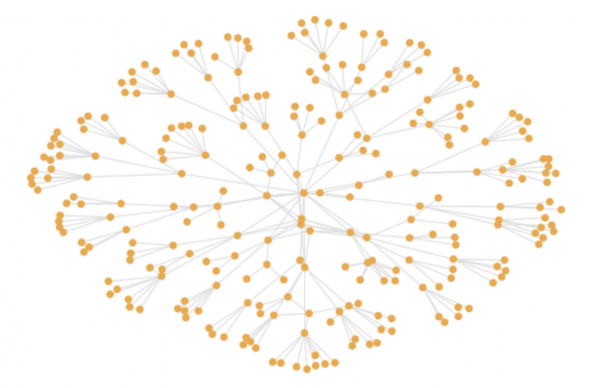

A visualization of the relationships between the more than 250 learning outcomes in Waymaker Microeconomics

First, we collaborate with faculty to identify each of the individual skills we want to support students in mastering. These detailed outcomes are, like all the content Lumen creates, licensed CC BY. Second, we align each individual page of content with the one or more outcomes whose mastery it supports. Finally, we align each assessment item with the outcome it is designed to assess. In the case of Waymaker Microeconomics, for example, that means aligning over 2,350 individual assessment items appearing in pre-tests, interactive practice opportunities, self-checks, and end-of-module quizzes with the appropriate learning outcome.

If that sounds like an incredible amount of work, that’s because it is!

But it’s worth it. In addition to providing benefits in the learning design process that we don’t discuss here, outcome alignment is fundamental to the continuous improvement process. With assessment items aligned to individual outcomes in pre-tests, practices, self-checks, and end-of-module quizzes, we can model learning over time, from the beginning of the module (the pre-test occurs before students see any OER) to the second attempt on the end-of-module quiz (after students have used and reused the OER). Similarly, because all course content is outcome-aligned, we can examine how patterns of OER usage correlate with performance on aligned assessments.
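To make the payoff of alignment concrete, here is a minimal Python sketch of the two analyses just described, assuming each assessment item maps to exactly one (outcome, stage) pair. The stage labels, tuple layouts, and function names are hypothetical; the post does not describe Lumen's actual data model. `mastery_by_stage` joins raw responses to the alignment map to trace each outcome from pre-test to second quiz attempt, and `pearson` is a plain Pearson correlation that could relate, say, a student's page views of an outcome's aligned content to their scores on its aligned items.

```python
import math
from collections import defaultdict

# Hypothetical stage labels, in the order students meet them in a module.
STAGES = ["pre-test", "practice", "self-check", "quiz-attempt-1", "quiz-attempt-2"]


def mastery_by_stage(item_alignment, responses):
    """Mean score per outcome at each assessment stage.

    item_alignment: {item_id: (outcome_id, stage)}; every item is aligned
        to exactly one outcome.
    responses: iterable of (student_id, item_id, score) with score in [0, 1].
    Returns {outcome_id: [mean score for each stage in STAGES]}, with None
    where an outcome has no responses at that stage.
    """
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(lambda: defaultdict(int))
    for _student, item_id, score in responses:
        # Alignment is what lets an item-level score roll up to an outcome.
        outcome, stage = item_alignment[item_id]
        sums[outcome][stage] += score
        counts[outcome][stage] += 1
    return {
        outcome: [
            sums[outcome][s] / counts[outcome][s] if counts[outcome][s] else None
            for s in STAGES
        ]
        for outcome in counts
    }


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(vx * vy)
```

Comparing an outcome's pre-test mean with its second-attempt quiz mean gives a rough picture of growth over the module. A usage-performance correlation, by contrast, describes association only and does not by itself show that the OER caused the difference.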

Analyzing the Effectiveness of OER

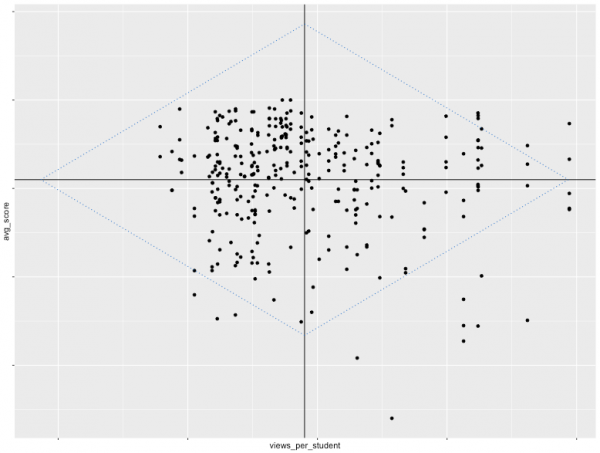

This process begins with a RISE analysis. I published the RISE framework last year with Bob Bodily and Rob Nyland, two amazing PhD students at BYU. Earlier this year I also published an open source implementation of RISE in the Journal of Open Source Software. RISE analysis divides both performance on assessments and usage of OER into two categories, higher and lower. Crossing these two dimensions produces a 2×2 matrix with four ways of diagnosing how OER are working in support of student learning.

|  | Lower Use of OER | Higher Use of OER |
| --- | --- | --- |
| Higher Grades | High student prior knowledge, inherently easy learning outcome, highly effective content, poorly written assessment | Effective resources, effective assessment, strong outcome alignment |
| Lower Grades | Low motivation or high life distraction, too much material, technical or other difficulties accessing resources | Poorly designed resources, poorly written assessments, poor outcome alignment, difficult learning outcome |
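The matrix above can be sketched in a few lines of Python. This is an illustration only, not the open source RISE implementation mentioned earlier. It assumes each outcome is summarized by one mean OER-usage figure and one mean assessment score, standardizes both across outcomes, and treats "higher" as at or above the mean (z ≥ 0). The usage metric, the cutoff, and the names `rise_quadrants` and `QUADRANTS` are assumptions.

```python
import statistics


def z_scores(values):
    """Standardize values to mean 0 and (population) standard deviation 1.

    Assumes at least two outcomes whose values are not all identical.
    """
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


# Keyed by (higher_grades, higher_use), mirroring the rows and columns above.
QUADRANTS = {
    (True, False): "higher grades, lower use",
    (True, True): "higher grades, higher use",
    (False, False): "lower grades, lower use",
    (False, True): "lower grades, higher use",
}


def rise_quadrants(outcomes):
    """Assign each outcome to one of the four RISE cells.

    outcomes: {outcome_id: (mean_oer_usage, mean_assessment_score)}.
    Returns {outcome_id: (z_usage, z_grade, label)}.
    """
    ids = list(outcomes)
    z_use = z_scores([outcomes[o][0] for o in ids])
    z_grade = z_scores([outcomes[o][1] for o in ids])
    return {
        o: (u, g, QUADRANTS[(g >= 0, u >= 0)])
        for o, u, g in zip(ids, z_use, z_grade)
    }
```

Standardizing puts usage (counts, minutes, page views) and grades (percentages) on a common scale, which is what lets them share one pair of axes.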

Each outcome in the course is placed in one of these four categories, as in the visualization below. We focus first on the outcomes in the lower right corner, where usage of OER is high but performance on aligned assessments is low. These are the places where effort invested in improving OER is most likely to improve student learning. In the visualization, the origin sits at mean OER usage (x-axis) and mean assessment performance (y-axis), and we have drawn a blue diamond three standard deviations out from the origin to make it easier to visually identify outliers in need of immediate attention.

RISE analysis visualization of Introduction to Business
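A diamond centered on the origin with its corners three standard deviations out along each axis is the set of points where |z_usage| + |z_grade| = 3. Assuming that reading of the chart, the hypothetical helper below keeps only lower-right outcomes (above-mean usage, below-mean grades), sorts them by that L1 distance so the most extreme come first, and flags those outside the diamond. The radius default and the function name are assumptions made for the sketch.

```python
import statistics


def flag_priority_outcomes(outcomes, radius=3.0):
    """Lower-right RISE outcomes, most extreme first.

    outcomes: {outcome_id: (mean_oer_usage, mean_assessment_score)}.
    Returns a list of (outcome_id, distance, is_outlier) tuples, where
    distance = |z_usage| + |z_grade| and is_outlier means the outcome lies
    outside the diamond of the given radius.
    """
    ids = list(outcomes)
    use = [outcomes[o][0] for o in ids]
    grade = [outcomes[o][1] for o in ids]
    mu_u, sd_u = statistics.fmean(use), statistics.pstdev(use)
    mu_g, sd_g = statistics.fmean(grade), statistics.pstdev(grade)
    flagged = []
    for o, u, g in zip(ids, use, grade):
        zu = (u - mu_u) / sd_u
        zg = (g - mu_g) / sd_g
        if zu > 0 and zg < 0:  # higher use of OER, lower grades
            distance = abs(zu) + abs(zg)  # L1 distance from the origin
            flagged.append((o, distance, distance > radius))
    return sorted(flagged, key=lambda t: t[1], reverse=True)
```

Sorting by distance gives a simple priority order. That is one plausible way to rank entries for something like the leaderboards described in the next section, though the post does not say how those are ordered.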

Making Targeted Improvements to OER

In the past, once the OER most in need of improvement were identified, we reached out to individual faculty to invite them to participate in the process of analyzing and improving course materials in collaboration with Lumen’s learning engineers and course designers. Moving forward, we will use the Learning Challenges Leaderboards to make this information public and invite the community to participate in the process of revising, remixing, finding, or creating new OER to better support student learning.

(In addition to continuously improving the OER based on outcomes data, we also make a wide range of other updates to our courses. For example, we update OER based on faculty feedback, current events, and the availability of new OER. We make improvements to assessments based on the results of item analysis, make improvements to features of the Waymaker platform (like faculty and student nudges) based on ways they correlate with student performance, and make improvements to supplementary materials based on faculty feedback.)
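The item analysis mentioned above is not specified further here. A common classical-test-theory version computes two statistics per item: difficulty (the proportion of students answering correctly) and discrimination (the correlation between the item score and each student's total on the other items). Below is a minimal Python sketch of that standard technique, assuming a 0/1 response matrix. It is not a description of Lumen's actual procedure, and `item_analysis` is a hypothetical name.

```python
import math
import statistics


def _pearson(xs, ys):
    """Pearson correlation; None when either input is constant."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return None
    return cov / math.sqrt(vx * vy)


def item_analysis(responses):
    """Classical item statistics for a 0/1 response matrix.

    responses: list of per-student lists; responses[s][i] is 1 if student s
    answered item i correctly, else 0. Returns one (difficulty,
    discrimination) pair per item. Discrimination is None when it is
    undefined, e.g. when every student got the item right.
    """
    n_items = len(responses[0])
    results = []
    for i in range(n_items):
        item_scores = [row[i] for row in responses]
        # Total on the *other* items, so the item is not correlated with itself.
        rest_scores = [sum(row) - row[i] for row in responses]
        difficulty = statistics.fmean(item_scores)
        results.append((difficulty, _pearson(item_scores, rest_scores)))
    return results
```

Items with near-zero or negative discrimination are the usual candidates for review as possibly poorly written, one of the explanations listed in the RISE matrix.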

The Role of Learning Materials in Education

It would be easy to look at the effort Lumen invests in improving OER and other courseware components and conclude that we think learning materials are the most important part of education. That would be a mistake. We believe deeply that the contributions of learners and faculty both significantly outweigh those of learning materials. However, we also believe that highly effective learning materials can dramatically amplify the efforts of learners and faculty. For example, highly effective learning materials can help learners reach the same levels of mastery in half the time required by materials that follow a traditional textbook design (Lovett et al., 2008).

There are myriad ways in which education needs to be improved. The role Lumen is choosing to play in the community working to improve education (which extends far beyond problems relating to learning materials) is to enable and empower learners and faculty with highly effective learning materials that become more effective every semester.

We’re working to engage a broad community of educators and institutions in the work of improving education by continuously improving OER course materials. We’re trying to make this complex task more transparent, measurable, and participatory. Given the creativity and commitment of the community we serve, we have every hope of success.